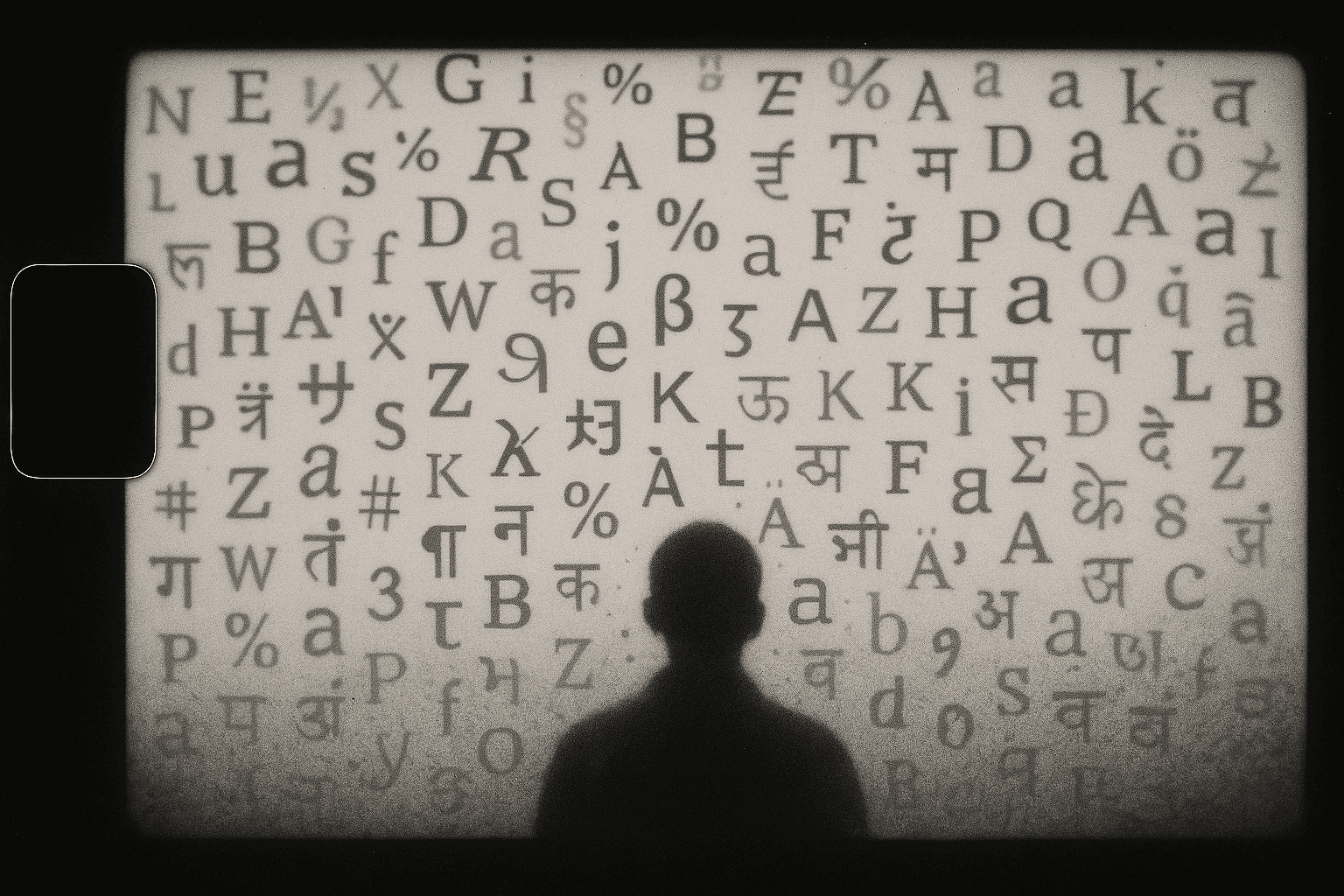

The Natural Language

Multilingual vs. translated model.

Context is King.

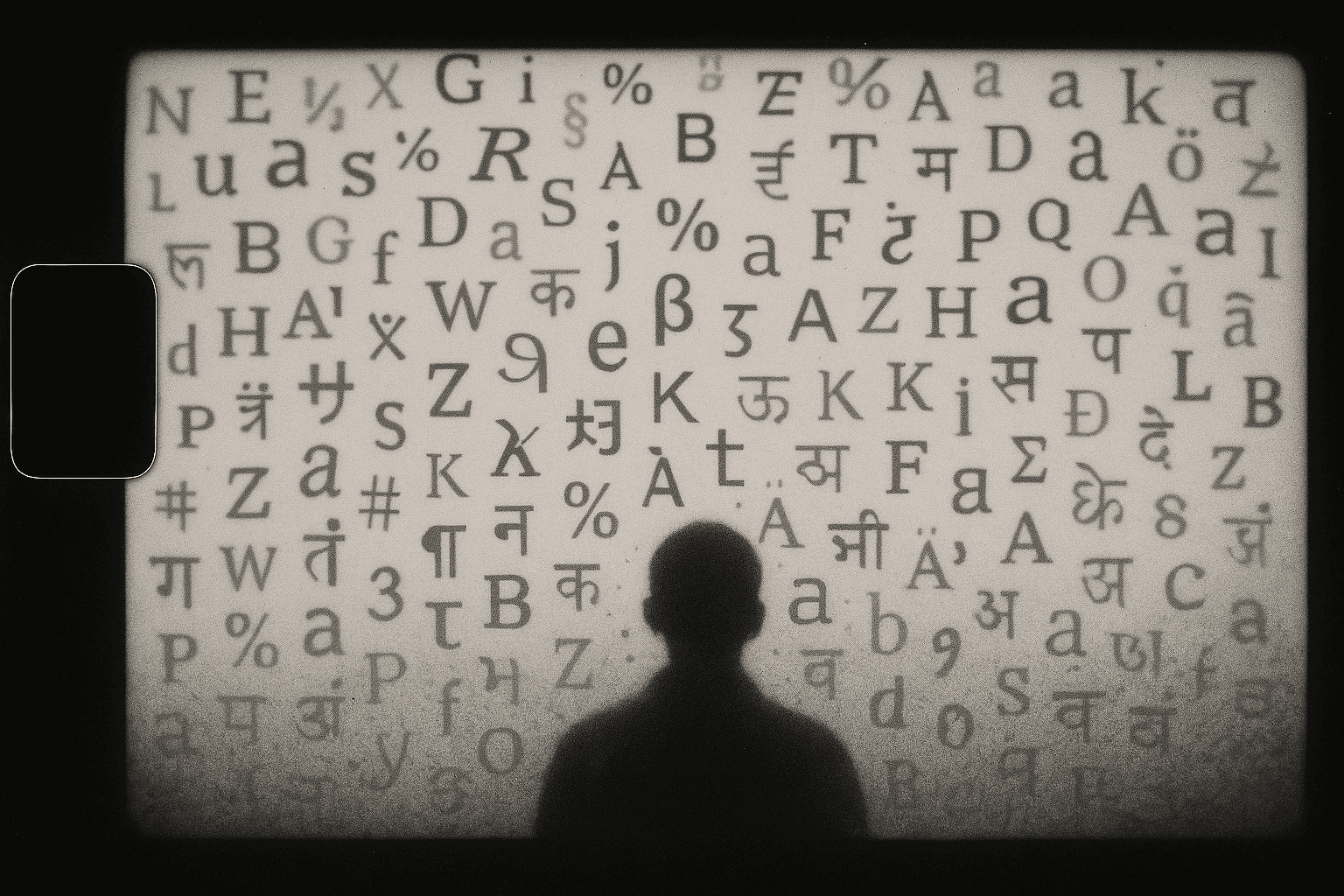

The Natural Language

Multilingual vs. translated model.

Context is King.

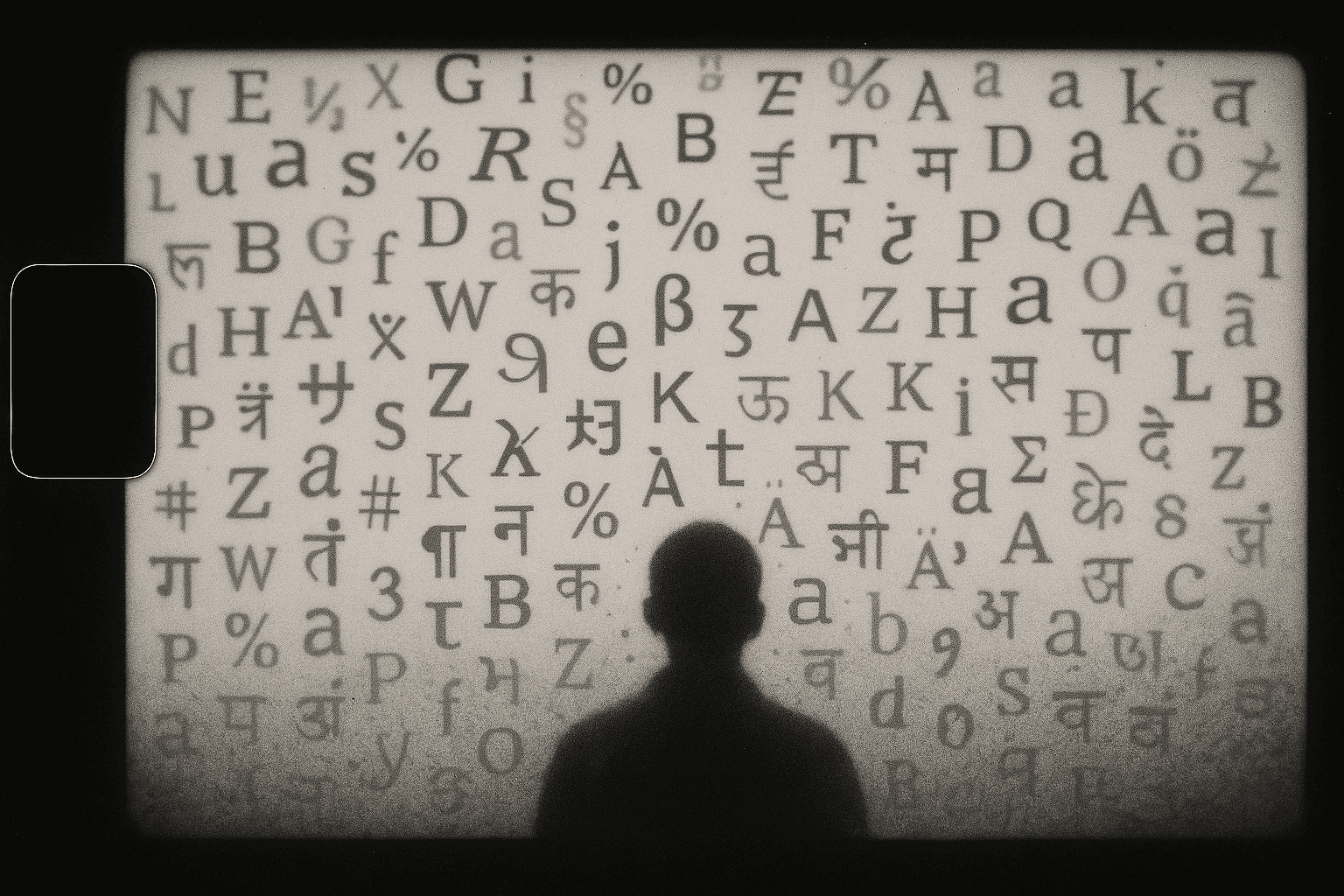

The Natural Language

Multilingual vs. translated model.

Context is King.

This episode will cover:

- What it means for a model to “think” in a language
- Multilingual-native vs. translation-first pipelines
- Why tone, idiom, and social context matter more than literal words
- The hidden costs of poor tokenization and weak alignment
- Who gets left behind if context is stripped away

Natural language here is not “English vs. everything else.”

It is the language the model can think in without translation. A multilingual model learns many languages directly, with their grammar, idiom, and social cues. A translated model mostly thinks in English, then leans on translation in and out. One reasons in-language. The other reasons around it.
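To make the contrast concrete, here is a minimal Python sketch of the two pipeline shapes. The `translate` and `reason` callables are hypothetical stand-ins for a machine-translation system and a language model. The sketch only shows where the user's original wording stops being visible to the reasoning step.

```python
from typing import Callable

# Hypothetical component signatures; real systems will differ.
Translate = Callable[[str, str, str], str]  # (text, source_lang, target_lang) -> text
Reason = Callable[[str], str]               # prompt -> answer


def translation_first(prompt: str, lang: str, translate: Translate, reason: Reason) -> str:
    """Translate in, reason in English, translate back out."""
    english_prompt = translate(prompt, lang, "en")
    # The reasoning step only sees the translation; register, idiom and
    # dialect cues the translator dropped are already gone at this point.
    english_answer = reason(english_prompt)
    return translate(english_answer, "en", lang)


def multilingual_native(prompt: str, reason: Reason) -> str:
    """Hand the user's own words straight to a model trained on that language."""
    return reason(prompt)
```

In the translation-first shape, anything lost on the way in (a formal pronoun, a dialect marker, a joke) cannot be recovered on the way out, because the return trip starts from the English answer.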

Translation is a bridge. It is not the river. We keep forgetting that. When a model is trained to think in your language, it carries your context. Politeness levels. Code-switching. Dialect. The way a joke lands in Lagos and misses in London. A translated model can miss all of that. It brings words across. It leaves texture behind.

Context is king because language is social. “Have you eaten?” in some cultures is a greeting, not a question. Honorifics in Korean and Japanese are not style; they are ethics. Arabic dialects shift by street and by screen. If the model’s “native” context is English, it will flatten these choices. That shows up as awkward tone, wrong register, or advice that feels off even when the grammar is fine.

There is also the less visible layer: tokens and cost. Some languages split into more tokens than others, so the same sentence can take longer to process and cost more. That can push entire communities to the margins, even when the model officially “supports” their language.
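Real token counts depend on the tokenizer, so the sketch below measures something tokenizer-independent: UTF-8 bytes per character. Byte-level BPE tokenizers start from bytes, so for a script whose vocabulary has few learned merges, token counts drift toward this byte count. Treat it as a rough upper-bound proxy, not a measurement of any particular model. The sample words are illustrative assumptions, not a benchmark.

```python
# Illustrative greetings only; single words are not a benchmark.
GREETINGS = {
    "English": "hello",
    "Russian": "привет",
    "Arabic": "مرحبا",
    "Hindi": "नमस्ते",
    "Japanese": "こんにちは",
}


def utf8_profile(text: str) -> tuple[int, int]:
    """Return (number of code points, number of UTF-8 bytes) for a string."""
    return len(text), len(text.encode("utf-8"))


def byte_report(samples: dict[str, str]) -> dict[str, float]:
    """UTF-8 bytes per code point for each language's sample."""
    report = {}
    for lang, text in samples.items():
        chars, n_bytes = utf8_profile(text)
        report[lang] = n_bytes / chars
    return report


if __name__ == "__main__":
    for lang, ratio in byte_report(GREETINGS).items():
        print(f"{lang:10s} {ratio:.1f} bytes per character")
```

English comes out at 1.0 byte per character, Cyrillic and Arabic at 2.0, and Devanagari and Japanese kana at 3.0. To see actual cost, swap `utf8_profile` for the tokenizer of the model you deploy and compare counts on parallel sentences.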

Safety depends on context too. A system aligned in English can still miss harmful prompts in other languages, dialects, or slang. Jailbreaks change shape when the language changes. This cannot be patched with a dictionary alone. Models need to be trained in those contexts, then judged in those contexts.
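Judging in context means reporting safety results per language instead of as one blended number, since a strong English score can hide weak coverage elsewhere. A minimal sketch, assuming a hypothetical `RedTeamResult` record produced by native-speaker red-teamers who label each prompt as harmful or not:

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class RedTeamResult:
    language: str  # e.g. "en", "sw", "ar-EG" (illustrative labels)
    harmful: bool  # did native-speaker reviewers judge the prompt harmful?
    refused: bool  # did the model decline to answer?


def miss_rate_by_language(results: list[RedTeamResult]) -> dict[str, float]:
    """Share of harmful prompts the model answered anyway, per language."""
    harmful: defaultdict[str, int] = defaultdict(int)
    missed: defaultdict[str, int] = defaultdict(int)
    for r in results:
        if r.harmful:
            harmful[r.language] += 1
            if not r.refused:
                missed[r.language] += 1
    # Only languages with at least one harmful prompt get a rate.
    return {lang: missed[lang] / n for lang, n in harmful.items()}
```

Splitting by dialect (for example Egyptian vs. Gulf Arabic) uses the same code with finer-grained labels.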

So how do we choose in practice?

- If your users speak many languages, favor multilingual-native models over translate-then-reason stacks.
- Test each language with native speakers, not with translated test sets.
- Watch register, tone, and dialect in user studies, not just accuracy.
- Track token budgets by language. Cost is a form of exclusion.
- Align safety per language, not as an English blanket.
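Most of that checklist is process, but the token-budget item is easy to automate. A minimal sketch, assuming you have token counts for the same parallel test items in each language (ideally written or checked by native speakers), produced by the tokenizer your model actually uses. The 1.5× threshold is an arbitrary placeholder.

```python
from statistics import mean

# Hypothetical input shape: {"en": {"q1": 10, ...}, "hi": {"q1": 30, ...}},
# i.e. token counts per parallel test item, per language.
TokenCounts = dict[str, dict[str, int]]


def token_premium(counts: TokenCounts, baseline: str = "en") -> dict[str, float]:
    """Mean ratio of each language's token count to the baseline's, over shared items."""
    base = counts[baseline]
    premiums: dict[str, float] = {}
    for lang, items in counts.items():
        shared = [item for item in items if base.get(item, 0) > 0]
        if shared:
            premiums[lang] = mean(items[item] / base[item] for item in shared)
    return premiums


def over_budget(premiums: dict[str, float], max_premium: float = 1.5) -> list[str]:
    """Languages whose token premium exceeds the (placeholder) threshold."""
    return sorted(lang for lang, p in premiums.items() if p > max_premium)
```

Under per-token pricing, a premium of 2.5 means users of that language pay roughly two and a half times as much, and wait longer, for the same content.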

The point is not purity. It is care. Translation will always have a place. But if we want systems that feel natural, we need models that think where people live. Context is not garnish. Context is the meal.